QnA Maker Lessons Learned + Limits Infographic

General Limits

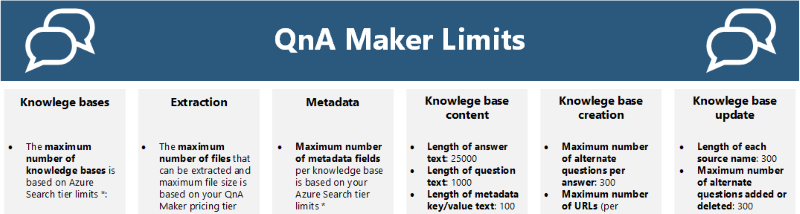

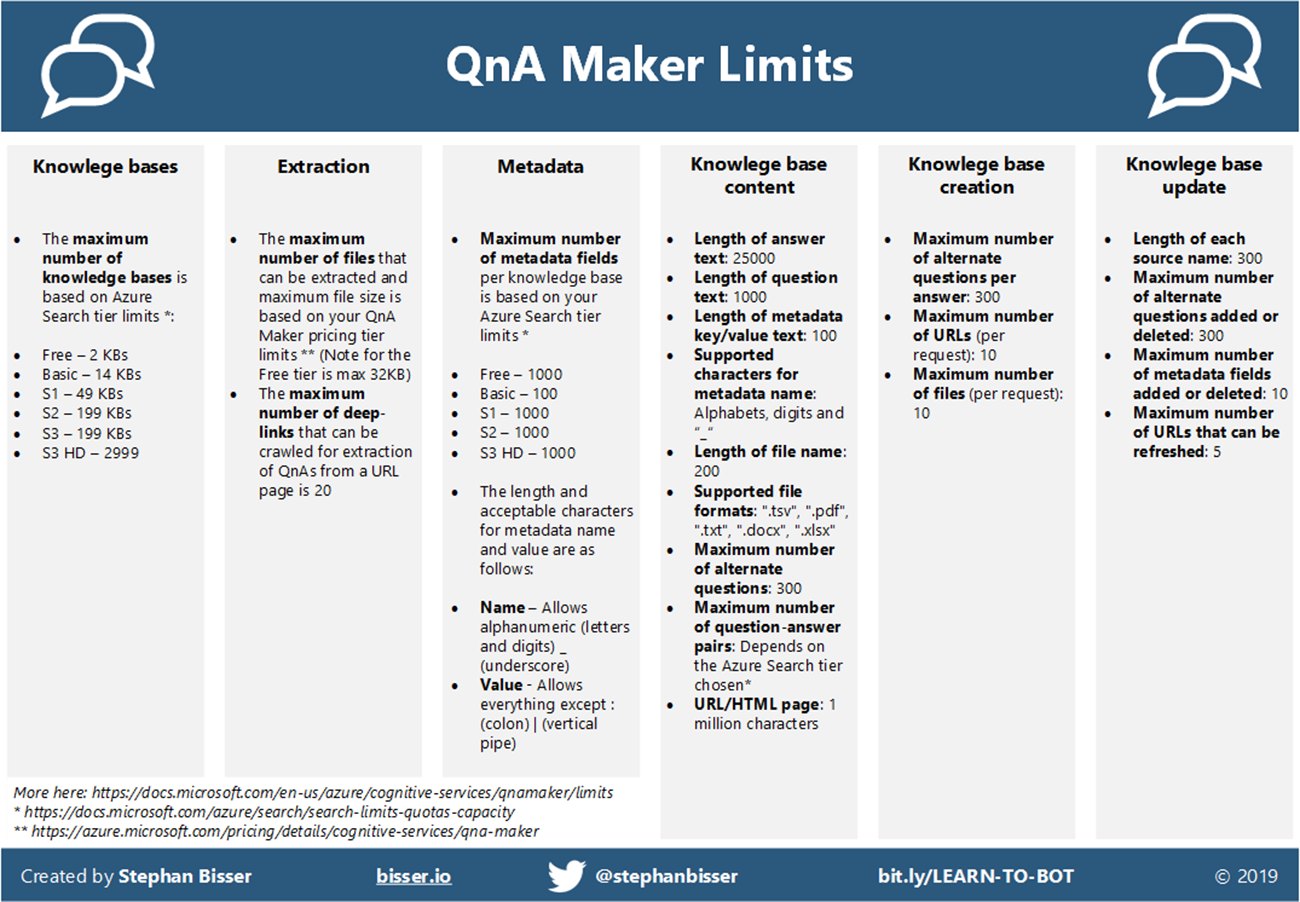

I work a lot with QnA Maker, one of the most commonly used Cognitive Services when developing a bot, so I wanted to share some insights into its limits that I have come across so far. If you have never seen it before, this docs article outlines the various limits you need to keep in mind when working with QnA Maker. The QnA Maker team did a pretty good job of outlining the limits for the various components:

- Knowledge bases

- Extraction

- Metadata

- Knowledge base content

- Knowledge base calls

- Knowledge base updates

If you look at the docs article above, you’ll probably think that is a lot of information, so I decided to create a quick infographic on the limits that you can use in presentations and so on. So here it is:

Feel free to use it internally in your presentations or documents. The only thing I ask is that you credit me and link to this article in the documents where you use it. Please do not sell this as your own 😉

Lessons Learned / Notes from the field

While writing this post, I thought about the projects I did with QnA Maker. This led me to the idea of adding some of my personal lessons learned, to give you some ideas about best practices and notes from the field.

Editors / Content Creators

When dealing with dynamic and domain-specific content, you as the “AI architect” cannot both implement the services (chatbot, LU models, supporting systems, …) AND take care of the content that the bot surfaces from a knowledge base built on QnA Maker. In my projects, my experience has been that it is always a good idea to onboard the domain experts into the project and assign them the role of what I call “content creators” or “editors”. Those people typically have profound knowledge of the given domain and are the ones who should be managing the knowledge base content as well. But you should always decide how those editors will update the knowledge base contents. For example, you could use files like Excel sheets or PDF files stored in SharePoint and just sync them to the KB (which is described here). This lets the editors update the QnA pairs, while you keep the ability to manage the QnA Maker service.

Architecture / Design

First of all, when working with QnA Maker in a chatbot scenario, it’s always good to start by outlining the data sources used for your knowledge base(s), because this can have a huge impact on the design of your QnA Maker setup as well as on your general bot architecture. If you decide on adding public URLs, for example, take a look at how many QnA pairs you will end up with. If you are using the QnA Maker free tier, there is a file size limit of 32 KB for the file where the URLs are stored. In my case this was approximately 250 - 300 URLs which I could store in one single knowledge base. If you need to store more URLs in a knowledge base, you can either shorten the URLs or split them up into two separate knowledge bases within the same QnA Maker instance.

But then you would need two things to make your scenario work properly:

- Group QnA pairs of the same/similar topic into the same KB

- Use Dispatch to route to the correct QnA KB to respond with the correct/best answer
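To make the splitting step more concrete, here is a minimal sketch of how a long URL list could be packed into groups that each stay under a size budget. It assumes the URLs are stored one per line and that the 32 KB figure mentioned above is the budget; the function name `split_urls_by_size` and the constant `MAX_BYTES` are made up for this illustration and are not part of QnA Maker.

```python
from typing import Iterable, List

# Assumption: the size limit mentioned above; adjust it for your tier.
MAX_BYTES = 32 * 1024


def split_urls_by_size(urls: Iterable[str], max_bytes: int = MAX_BYTES) -> List[List[str]]:
    """Greedily pack URLs into groups whose newline-joined size stays within max_bytes."""
    groups: List[List[str]] = []
    current: List[str] = []
    current_size = 0
    for url in urls:
        # Count the UTF-8 bytes of the URL plus one byte for the line break.
        size = len(url.encode("utf-8")) + 1
        if size > max_bytes:
            raise ValueError(f"single URL exceeds the size budget: {url[:60]}")
        # Start a new group when the current one would overflow.
        if current and current_size + size > max_bytes:
            groups.append(current)
            current, current_size = [], 0
        current.append(url)
        current_size += size
    if current:
        groups.append(current)
    return groups
```

Note that this only splits by size. To keep QnA pairs of the same topic in the same KB, you would group the URLs by topic first and then apply the size split within each topic.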

Dispatch

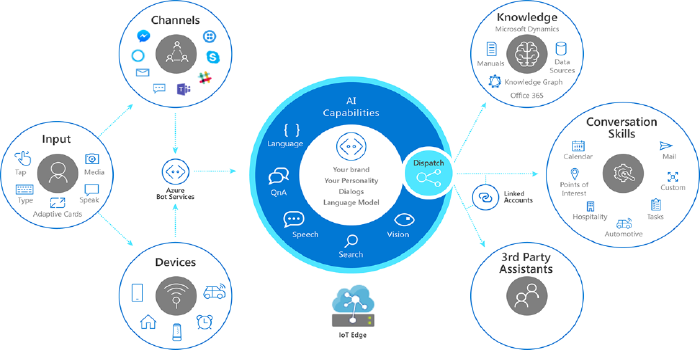

Dispatch is a tool/service supporting the language understanding part of your solution. It can mainly be used to route messages to the correct consumer. Dispatch itself is a CLI tool, which accepts LUIS applications/models as well as QnA Maker KBs as inputs and chains/aggregates them together into one single LUIS app (= the Dispatch LUIS app). This Dispatch LUIS app is then used as the first touchpoint for your solution, mostly a bot, to check where to go in terms of language understanding.

Dispatch is a great tool that helps you combine QnA Maker KBs and LUIS apps in a single solution, to enhance the language understanding part of your solution/bot. Furthermore, when using more than one QnA Maker KB, it can make sense to use Dispatch as a “routing” service that distributes requests to different KBs in the backend, without the need to implement a custom routing solution.
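On the bot side, the routing idea boils down to mapping the top intent returned by the Dispatch LUIS app to the KB that should answer. The following is only a sketch of that mapping step, not the Dispatch tool or the Bot Framework API: the intent names, KB IDs, the `pick_knowledge_base` function and the score threshold are all hypothetical.

```python
from typing import Mapping, Optional

# Hypothetical mapping from Dispatch intent names to knowledge base IDs.
INTENT_TO_KB = {
    "q_hr_faq": "kb-id-hr",
    "q_it_faq": "kb-id-it",
}


def pick_knowledge_base(top_intent: str, score: float, min_score: float = 0.5,
                        routes: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the KB ID for the top intent, or None if it is unknown or scored too low."""
    if routes is None:
        routes = INTENT_TO_KB
    # A low score means the dispatch model is unsure, so do not route at all.
    if score < min_score:
        return None
    return routes.get(top_intent)
```

If the function returns `None`, the bot could fall back to a default answer or ask the user to rephrase, instead of querying a KB that is unlikely to contain a good match.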

Multiturn Prompts

If you plan to implement some sort of dialogs in your bot without actually writing code, you can use a QnA Maker feature called “Multiturn Prompts”. This feature lets you define follow-up prompts for every QnA pair in your KB. In other words, you define a question as well as a follow-up question that is offered once the user has asked the first question and the bot has delivered its answer.

This makes total sense if you plan to implement what I call “lightweight dialogs” and don’t want to develop the dialogs in your code base. But always remember that you are limited in terms of flexibility when using this feature. It is only designed to ask one or more questions after the bot has given a response. It is not intended to replace the complete dialog management system offered by the Bot Framework. So I’d recommend using it in a scenario where you have a given sequence of questions you want to ask based on a first question. You should always check for which parts of your dialog tree you want to use this feature and which parts need more (like validation or something more specific) and therefore have to be implemented in code.
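To illustrate the shape of such a “lightweight dialog”, here is a small in-memory model of QnA pairs with follow-up prompts. This is a simplified illustration and not the QnA Maker data format; the class names, field names and sample content are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Prompt:
    display_text: str  # text shown to the user as a follow-up option
    qna_id: int        # the QnA pair this prompt leads to


@dataclass
class QnaPair:
    qna_id: int
    question: str
    answer: str
    prompts: List[Prompt] = field(default_factory=list)


def build_sample_kb() -> Dict[int, QnaPair]:
    """Build a tiny, made-up KB with one question that offers two follow-ups."""
    pairs = [
        QnaPair(1, "How do I book a trip?", "You can book by phone or online.",
                prompts=[Prompt("Book by phone", 2), Prompt("Book online", 3)]),
        QnaPair(2, "Book by phone", "Call the travel desk."),
        QnaPair(3, "Book online", "Use the travel portal."),
    ]
    return {p.qna_id: p for p in pairs}


def follow_ups(kb: Dict[int, QnaPair], qna_id: int) -> List[str]:
    """Return the follow-up prompt texts offered after answering qna_id."""
    return [p.display_text for p in kb[qna_id].prompts]
```

The model also shows the limitation: a prompt can only point to another QnA pair. Anything like input validation or branching on user data has no place in this structure and needs real dialog code.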

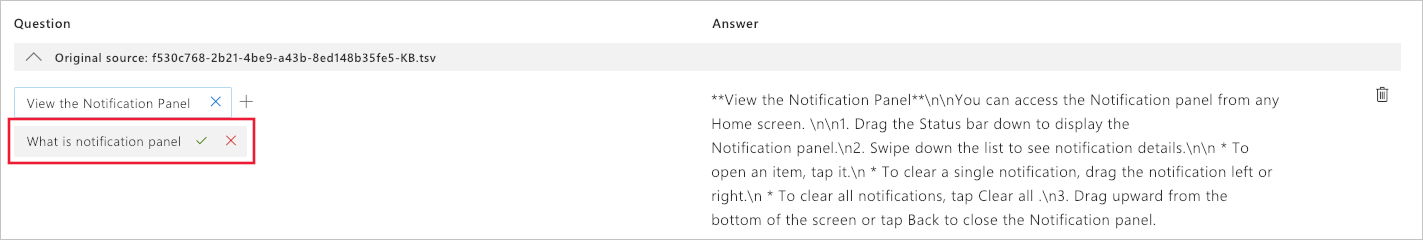

Active Learning

Whenever you build an ML solution, it is obvious that you need to continuously refine the ML models. That’s also true for the language understanding models and QnA Maker KBs used for your chatbot. When using QnA Maker within your bot, you should always set up a process for iterating over the KB content and updating the QnA pairs, to cover a wide range of potential user questions. To support this, the QnA Maker team has added a feature called “Active Learning” to the service, which basically gives you as KB admin/editor suggestions for alternative questions that you could use to improve the quality of the KB content. In production this will look like this:

Feedback

App Insights

When developing bots, you should always provide some kind of user feedback mechanism. This can help you refine the bot to increase user adoption. The same is true for the QnA Maker KB. A good way to make use of the users’ feedback is to add Application Insights to your chatbot and QnA Maker solution. This can help you find the top trending questions and see which QnA pairs are used most often. But you can also get insights into the questions which could not be answered by the service. With this in place, you can build fancy reports using the Application Insights query functionality, which you can then give to your editors and KB admins to review on a regular schedule.
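The kind of report described above can be sketched in a few lines. The snippet below is not an Application Insights query. It assumes you have already exported the telemetry into simple records with a `question` and a `score` field, both of which are hypothetical names, and that a score below `min_score` counts as “not answered well”.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def summarize_questions(records: Iterable[Dict], top_n: int = 3,
                        min_score: float = 50.0) -> Tuple[List[Tuple[str, int]], List[str]]:
    """Return the most frequent questions and the ones that scored below min_score."""
    counts: Counter = Counter()
    unanswered: List[str] = []
    for rec in records:
        # Normalize so that trivial variations count as the same question.
        question = rec["question"].strip().lower()
        counts[question] += 1
        if rec["score"] < min_score and question not in unanswered:
            unanswered.append(question)
    return counts.most_common(top_n), unanswered
```

The first list tells the editors which QnA pairs matter most to users, the second one is a to-do list of questions that may need a new or improved QnA pair.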

Continuous Improvement

Another important topic when using ML-based services is to continuously improve the models & services. It is not a one-shot thing, but requires you to iterate over the services and refine them. This helps you deliver the best possible user experience and keeps user adoption at a high level. One thing I would recommend is to let the editors work in iterations, adapting the KB content based on the users’ feedback and the Active Learning suggestions they get. This will make sure you continuously improve your KB and that the content in there is up to date.

Automation brings advantage

As stated earlier, if you plan on separating the QnA Maker service admins from the KB editors, a good approach is to use files stored in SharePoint or on your public website, which the editors maintain in the respective source system without touching the QnA Maker KB. If you go for such an approach, you need a way to automate the test and publish phases for the QnA Maker KB. This can for example be done using Azure Logic Apps or Azure Functions, which run continuously and hit the “Save & Train” endpoint of your KB followed by the “Publish” endpoint. As QnA Maker itself is just an API you can hit, there are a lot of API endpoints provided, so if you plan to automate that part, simply take a look at my QnA Maker Postman Collection to see which endpoints are available.
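As a rough sketch of what such an automation could send, the snippet below only builds the two HTTP requests and does not execute them. The URL path, the `Ocp-Apim-Subscription-Key` header, the use of PATCH for updating and POST for publishing, and the `add.urls` body are assumptions based on the QnA Maker v4.0 REST API; please verify them against the API reference or the Postman collection before relying on them. The function names are made up.

```python
import json
import urllib.request
from typing import Iterable


def _kb_url(endpoint: str, kb_id: str) -> str:
    # Assumed URL layout of the v4.0 knowledge base endpoint.
    return f"{endpoint.rstrip('/')}/qnamaker/v4.0/knowledgebases/{kb_id}"


def build_update_request(endpoint: str, kb_id: str, key: str,
                         urls: Iterable[str]) -> urllib.request.Request:
    """Build (but do not send) a request that adds source URLs to the KB."""
    body = json.dumps({"add": {"urls": list(urls)}}).encode("utf-8")
    return urllib.request.Request(
        _kb_url(endpoint, kb_id), data=body, method="PATCH",
        headers={"Ocp-Apim-Subscription-Key": key,
                 "Content-Type": "application/json"})


def build_publish_request(endpoint: str, kb_id: str, key: str) -> urllib.request.Request:
    """Build (but do not send) a request that publishes the KB."""
    return urllib.request.Request(
        _kb_url(endpoint, kb_id), data=b"", method="POST",
        headers={"Ocp-Apim-Subscription-Key": key})
```

In an Azure Function you would pass each request to `urllib.request.urlopen` and wait for the update to finish before publishing. Keeping the request building separate from the sending makes this part easy to test without touching the real service.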

Summary

Summing up, QnA Maker is a great service to use for chatbots that should be capable of dealing with FAQs. In production, however, I think the best solution design is to combine QnA Maker (as it is itself one part of the “Language” area of Cognitive Services) with other services like LUIS to get the best out of it. I would always answer the question “Is QnA Maker ready to be used in an enterprise scenario?” with yes, because you can establish security & compliance rules and you are the owner of the data stored in there.

So if you plan on using QnA Maker, please take a look at the infographic above showing the limits of that service and you should be good to go…