Exploring Autonomous Agent Capabilities with Microsoft Copilot Studio

Introduction

Microsoft is pushing the boundaries of business process automation with Copilot Studio, a cloud-based, low-code platform for building AI agents. Its latest release introduces autonomous agent capabilities that let agents respond proactively to events, orchestrate tasks, and integrate with enterprise data sources. These features open exciting prospects, but in my opinion they currently feel quite basic, which likely reflects their preview status.

Autonomous Agent Capabilities at a Glance

With Copilot Studio, Microsoft now provides tools to build autonomous agents that can:

- Monitor & React: Automatically respond to business signals or triggers to initiate tasks.

- Execute Business Processes: Leverage AI orchestration to run rule-based workflows and automate repetitive tasks.

- Integrate with Data Sources: Connect to Microsoft Graph, Dataverse, and other sources through connectors to pull in context.

- Enhance Productivity: Offer a low-code way for teams to extend Microsoft 365 Copilot with personalized AI agents.

Fundamental Architecture Diagram

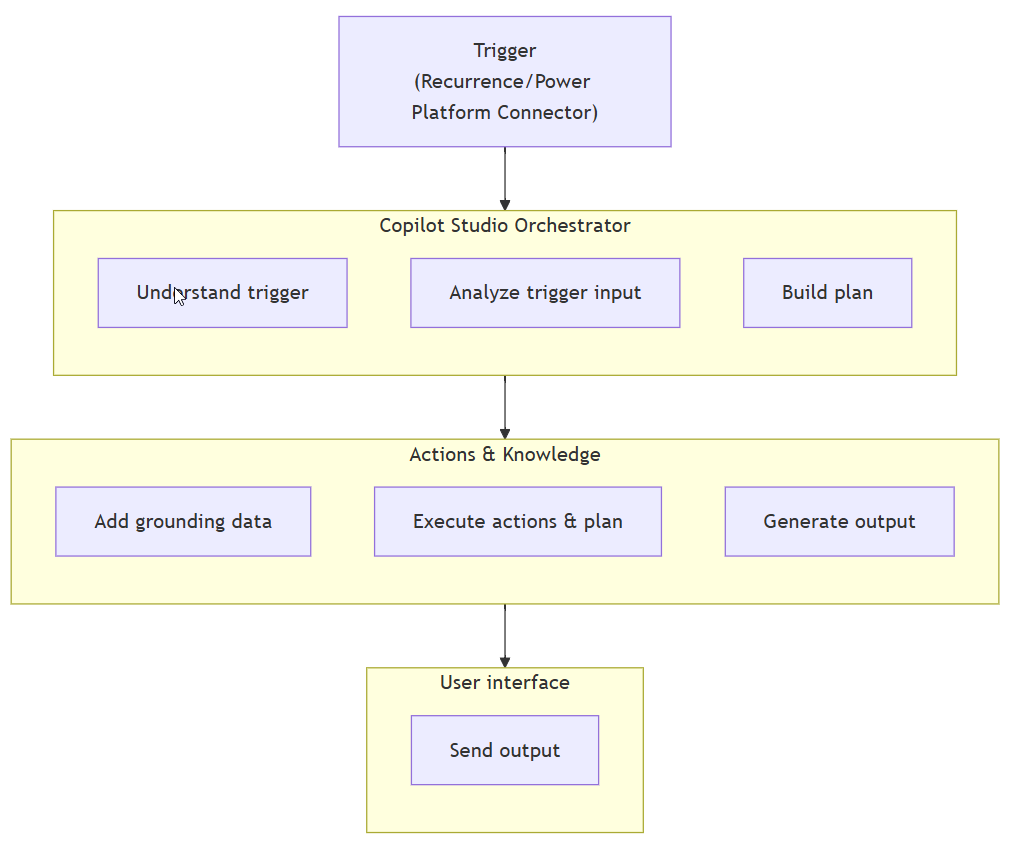

A core element of understanding autonomous agents in Copilot Studio is their underlying architecture. Below is a diagram which displays a visual representation of the system:

Figure 1: Fundamental Architecture for Autonomous Agents in Microsoft Copilot Studio

This diagram illustrates how user interactions are processed through Copilot Studio: an orchestration engine interprets the trigger and its inputs, then builds and executes a plan. The agent then accesses context-rich data from secure sources to generate a response.
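To make the trigger, plan, and execute flow more concrete, here is a minimal conceptual sketch in Python. It is not Copilot Studio's API: Copilot Studio agents are built in its low-code designer, and every name below (`Trigger`, `PlanStep`, `build_plan`, `run_agent`, the `KNOWLEDGE` dictionary standing in for a secure data source) is hypothetical. The real orchestration engine plans with a language model; this sketch swaps in a fixed rule so it stays deterministic.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical types illustrating the trigger -> plan -> execute flow only.
@dataclass
class Trigger:
    name: str     # the business event, e.g. a new support ticket
    inputs: dict  # payload that arrived with the event

@dataclass
class PlanStep:
    tool: str     # name of an action the agent may call
    args: dict    # arguments the orchestrator chose for that action

# Hypothetical stand-in for a secure enterprise data source.
KNOWLEDGE = {"alice@contoso.com": {"name": "Alice", "tier": "premium"}}

def lookup_customer(context: dict, email: str) -> None:
    # Ground the agent in context-rich data before it responds.
    context["customer"] = KNOWLEDGE.get(email, {"name": "unknown", "tier": "standard"})

def draft_reply(context: dict, subject: str) -> None:
    c = context["customer"]
    context["reply"] = f"Hi {c['name']}, we received '{subject}' ({c['tier']} support)."

# Registry of actions the orchestrator is allowed to use.
TOOLS: dict[str, Callable[..., None]] = {
    "lookup_customer": lookup_customer,
    "draft_reply": draft_reply,
}

def build_plan(trigger: Trigger) -> list[PlanStep]:
    # A real orchestrator would let a language model choose the steps;
    # a fixed rule keeps this sketch predictable.
    if trigger.name == "new_support_ticket":
        return [
            PlanStep("lookup_customer", {"email": trigger.inputs["email"]}),
            PlanStep("draft_reply", {"subject": trigger.inputs["subject"]}),
        ]
    return []

def run_agent(trigger: Trigger) -> str:
    # Execute each planned step in order, sharing state through `context`.
    context: dict = {}
    for step in build_plan(trigger):
        TOOLS[step.tool](context, **step.args)
    return context.get("reply", "No plan for this trigger.")

if __name__ == "__main__":
    ticket = Trigger("new_support_ticket",
                     {"email": "alice@contoso.com", "subject": "Login issue"})
    print(run_agent(ticket))  # Hi Alice, we received 'Login issue' (premium support).
```

Separating planning from execution mirrors the diagram: the plan is just data, so it could be inspected or approved by a human before any action runs, which is the kind of oversight the preview still seems to need.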

The Preview Nature: Opportunities and Limitations

While the preview release is promising, the current autonomous agent capabilities appear somewhat basic:

- Limited Customization: The workflow options and decision-making granularity are still evolving.

- Early-Stage Integrations: Integrations with external systems work at a basic level; expect enhancements as additional connectors and refinements arrive.

- Dependence on User Feedback: Early adopters find that while the automation framework offers efficiencies, further adjustments and human oversight are needed to handle complex real-world scenarios.

These observations suggest that the preview is an important first step, setting the stage for further refinements as Microsoft iterates based on customer feedback.

Looking Ahead

Microsoft’s vision for transforming business processes with AI is clear. As additional features and deeper integrations are developed, we can expect:

- More advanced decision-making capabilities.

- Enhanced automation for complex workflows.

- Greater interoperability across the Microsoft ecosystem.

Even though the current functionality may seem basic, it represents a foundational step towards a future where AI agents can autonomously handle a wide array of business tasks with minimal human intervention.

What do you think about the current state of autonomous agent capabilities in Copilot Studio? Do you see this basic functionality as laying the groundwork for a more advanced, fully autonomous future?